Here I’m posting my talk from the 2015 AMIA Conference Opening Plenary. This is intended to be read as fast as possible while in a state of severe anxiety. Thanks #amia15 and good luck #amia40.

Dave

Hi everyone,

Thank you. I’m Dave Rice, an archivist at City University of New York. Last week I got an email from Caroline asking if I would speak to you about AMIA. I don’t know very much at all about baby AMIA or about what sort of archivist gatherings led to the conception of baby AMIA. But I can speak about the preteen AMIA that I met in 2001 and try to discern what’s been happening here since and speak about hopes and fears for the future of AMIA. This is actually my 13th AMIA conference so at some time later today I will have participated in half of the AMIA conferences.

Before 2001 no one told me about any of this AMIA stuff. I had a vague awareness of the fact that archives did exist but this was comparable to my awareness that black holes exist. I didn’t have any reason to consider that either black holes or archives were irrational, but also had no awareness of their direct consequence to my life or community. As I explored an interest in silent film I started to ponder such questions as “Why is it that there are 20 different versions of the Chaplin Mutual shorts on home video but so many other films aren’t available in any form? And why does so much silent film on home video look terrible and occasionally it doesn’t?” Although I couldn’t directly observe audiovisual archivists I began noticing enough observational evidence to form a hypothesis that they do somehow exist and I wanted to attempt to communicate with one.

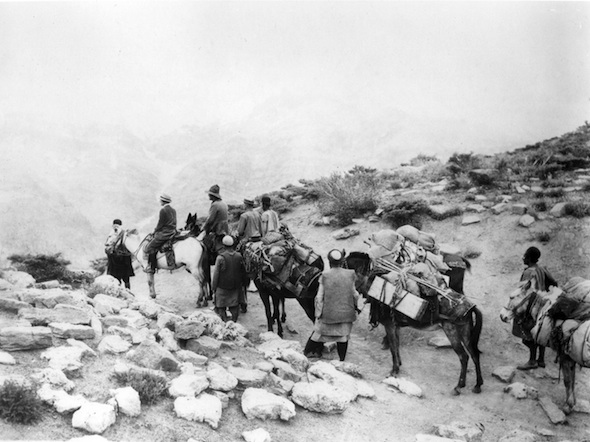

I had a home video of the 1925 film “Grass: A Nation’s Battle for Life” from Milestone Films, about the migration of a nomadic society, so in March 2001 I sent an email from dericed@hotmail.com to MileFilms@aol.com. Presumably it was something like: “Dear MileFilms@aol.com, I am 22 years old. Please tell me the truth, are there audiovisual archivists?”

Dennis Doros kindly replied with acknowledgement that audiovisual archivists are real and provided suggestions which significantly impacted my professional development, including “Look into joining the Association of Moving Image Archivists (AMIA) as they are a very good group. I believe the website is www.amianet.org If not that, do a search and I’m sure you’ll find it.”

That year I started following the AMIA listserv that taught me things like how to try to get the last word in a technical argument and how to unsubscribe from a listserv. I had many questions but found the listserv too intimidating to participate in directly (it still is). Instead I would email individuals offlist with my dumb questions and began to find community in their usually kind and patient responses.

In 2002 I received a small scholarship from the Kodak Student Filmmaker Program that made it possible to attend my first AMIA conference. Attending the first one was eye-opening as it demonstrated how professionally diverse AMIA is: similar objectives but significant diversity in the perception of and response to the challenges that affect the field. AMIA is not only diverse in specialty, but also diverse in circumstance. Many attendees are here at the expense of their employer and others have taken days off of work to pay their own way. I have been encouraged by the increased diversity of voices. Today at the conference, note that every panel (excepting one solo) includes a woman presenter.

Within AMIA over the last 25 and next 25 years I think some things will be consistent. Our field will always include elements of despair and paranoia, as there’s either nitrate that won’t wait or the digital dark age. We face decay and loss on all formats, though with different rates and burdens. We’ll continue to struggle with hope as the archival metrics of acquisition, conservation, preservation and decay collide.

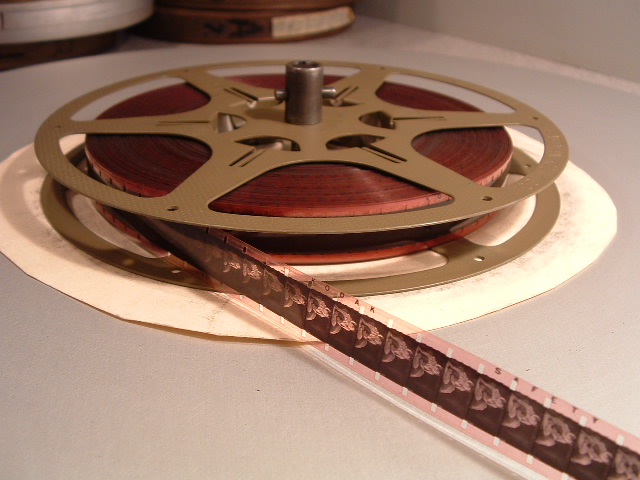

A future AMIA will continue to evolve against the needs of the formats we seek to sustain and use. Already I suspect that a majority of those here do not and will not have the opportunity to work as an archivist of film. A future AMIA will find film, videotape, analog and tape-based digital formats to be less predominant as newer archivists, employing new tactics, join us.

There are concerns that AMIA’s expertise in film and analog formats is lessening, but I suggest an additional concern that our expertise in digital materials has been slow to expand. A future AMIA will certainly be a more technologically diverse AMIA.

A recent AMIA thread focused on an article that was critical of the role of open source technology in LTO tape storage, something an increasing number of us directly depend on. I was very encouraged by John Tariot’s comments in this thread: “I would point to the work of many in AMIA, primarily our younger members, who have wrested away control of digitizing, cataloging, storing, and sharing media from closed, proprietary and expensive systems which, only a few short years ago, were our only options. Hacktivist and open-source culture has made a big change in our field, and should give you reason to hope!”

Personally, talking about AMIA present or future is a challenging experience for me. I am happy to come to AMIA and present research or technical findings but for myself and for many here the topic of AMIA is very personal. I was not a part of the early formation of AMIA but AMIA has been essential in my own professional formation. Having worked within AMIA for 13 years I can no longer claim imposter syndrome but can freely admit that I advocate for change and inclusion within our organization and agree that open-source culture has been a dramatic and, personally, a welcome change to our field.

I am gratified that we are not a format-specific community; not an association of film archivists nor optical media conservators, but an association focused on the archival challenges of the ever-evolving concept of the moving image in whatever forms it may be carried. We are not the Association of Time Capsule managers; preservation will be increasingly active in a future AMIA.

For the future AMIA I see the balance of conservation vs. migration tipping so that preservation is a much more active and involved endeavor. Film’s conservational powers make it feasible to maintain a collection over a long period of time by paying the electric bill. Digital storage cannot, and does not seem to try to, compete with film’s conservational abilities; instead, for digital storage most recommend an ongoing series of frequent migrations. Asking a professional field that is tasked with stabilizing our moving image heritage to move from the stability of film to perilous digital carriers is somewhat like suggesting that a kingdom leave its walled city to take up a life as nomads, that is, to leave Castle Film to wander and to struggle from tent to tent of hard drive to server to data tape.

We have new powers with digital data that we did not have with analog media, in that migration is scalable many-to-one rather than one-to-one, and more menial work can be automated as the humans move to new challenges.

I certainly don’t mean to understate the advantages in scale and authenticity that digital moving image archiving can provide, but as archivists we should not play John Henry. We should not deplete our own personal resources to our own professional death in order to compete with and resist the opportunities of digital preservation.

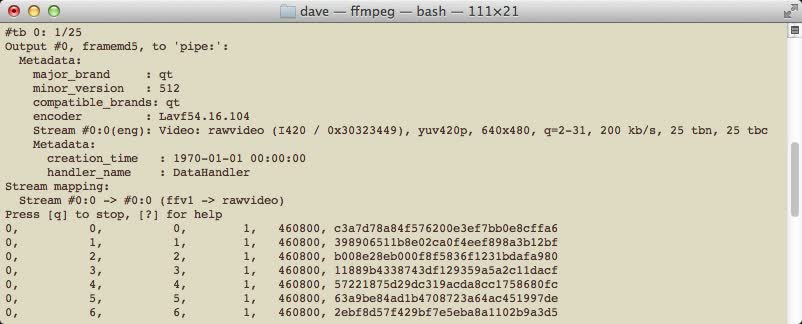

AMIA includes decades of refined experience and expertise in many aspects of analog moving image preservation but in some digital areas we are either the newcomers or the late arrivals. We have many tools and tricks for restoring film and analog video but our toolkit for the same with digital media is still in development. Whether unspooling a film through your hands on the bench or deciphering hexadecimal payloads of a QuickTime header, I anticipate that a future AMIA will find that the same objectives and concerns of our digital and analog formats may be resolved through unique means that are increasingly perceived as parallels.

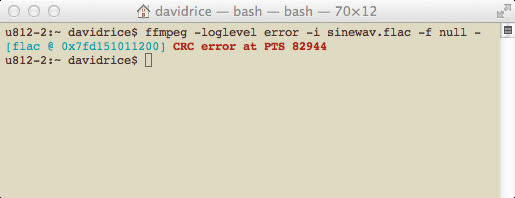

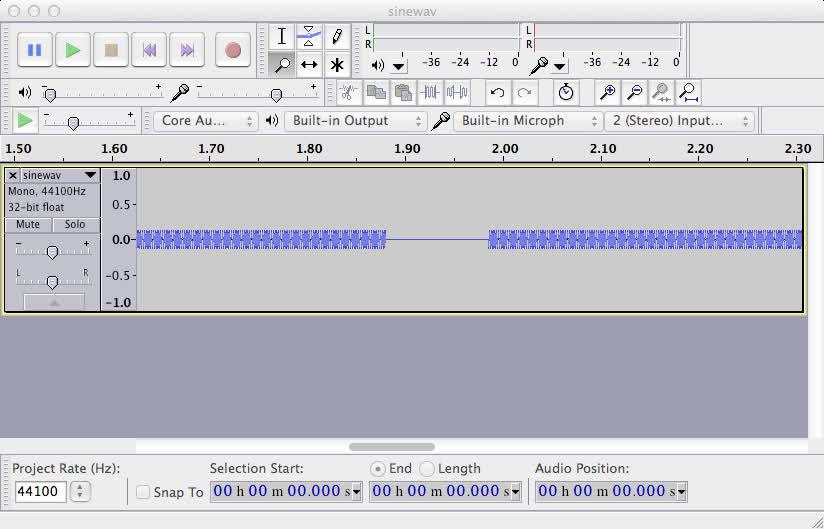

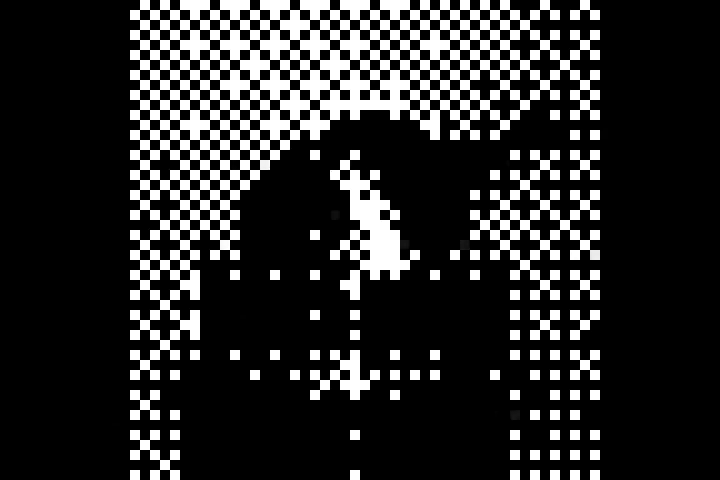

While studying film preservation I was taught that film decays gracefully but that digital counterparts will decay unnoticed up until a point where the data is no longer usable and nothing can be done. Increasingly we know that this is not true; there are ways to carry out the same preservation tactics in both data and film. The preservation of the moving image needs AMIA’s expertise, innovation and community in preservation and access no matter what the format of the moving image.

Finally, some news: many archivists and projects have been exploring and testing use of the open formats of lossless FFV1 and the Matroska container in preservation contexts. In fact Indiana University recently announced the selection of FFV1 and Matroska for their large-scale digitization efforts. Many archivists have contributed to the evolution of these open formats, and the European Commission-funded PREFORMA project enabled the groundwork to propose standardization of these formats through an open standards organization, the Internet Engineering Task Force. At 8am this morning the Internet Engineering Steering Group approved the charter, timeline and project proposal for an IETF Working Group called CELLAR, standing for Codec Encoding for LossLess Archiving and Realtime transmission. FFV1 and Matroska will be standardized and change control will move from FFmpeg and Matroska to an open standards body.

To close, looking at the future AMIA, I see there are more ways to participate and collaborate on our challenges, there is more technological and personal diversity within the group, with more inclusion, sympathy, and impact amongst us. There are new opportunities for archivists to participate directly in creating solutions rather than adopting them awkwardly from other communities. We have a lot to be hopeful for in a future AMIA. Thanks so much.